NVIDIA is applying AI Model Distillation to financial data workflows. The approach targets the integration of large language models (LLMs) in quantitative finance, particularly for tasks such as alpha generation, automated report analysis, and risk prediction.

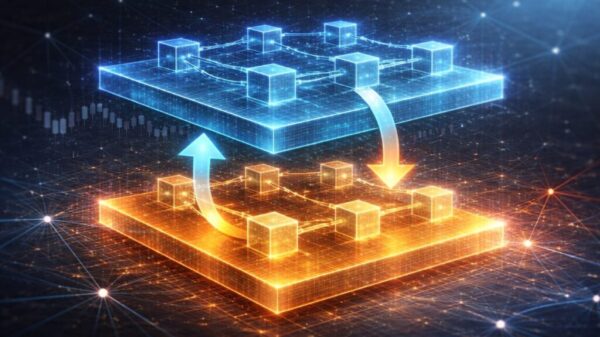

Despite the potential of these models, their adoption has been hampered by high costs, latency, and complex integration. To address these obstacles, NVIDIA has introduced AI Model Distillation, a technique that transfers knowledge from a larger, more powerful model, referred to as the “teacher,” to a smaller, streamlined “student” model. This approach conserves computational resources while preserving accuracy, making it particularly suitable for deployment in edge or hybrid environments.
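To make the teacher-to-student transfer concrete, here is a minimal sketch of one common formulation, soft-label distillation, in which the student is trained to match the teacher's temperature-softened output distribution. This is an illustrative assumption, not a description of NVIDIA's implementation; the function names, the example logits, and the temperature value are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by temperature squared.

    Assumption: this is the classic soft-label loss, shown only for illustration.
    """
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits over three classes (for example: buy, hold, sell).
teacher = [2.0, 0.5, -1.0]
aligned_student = [2.0, 0.5, -1.0]
misaligned_student = [-1.0, 0.5, 2.0]

print(distillation_loss(aligned_student, teacher))
print(distillation_loss(misaligned_student, teacher))
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it pulls the smaller model toward the larger model's behavior. In practice this term is often combined with a standard loss on ground-truth labels.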

This matters in fast-moving financial markets, where models must be refined continuously and deployed quickly. The NVIDIA Data Flywheel Blueprint orchestrates this process. It acts as a centralized control plane that streamlines interactions with NVIDIA NeMo microservices, and it supports dynamic orchestration of both experimentation and production workloads, improving the scalability and observability of financial AI models.

By using NVIDIA's suite of tools, financial institutions can distill LLMs into efficient, domain-specific variants. This reduces latency and inference costs while maintaining high accuracy, supporting rapid iteration and evaluation of trading signals. The tooling also accommodates both on-premises and hybrid cloud deployments, which helps institutions comply with financial data governance standards.

Initial results from implementing AI Model Distillation are promising. Observations indicate that larger student models have a greater capacity to learn from their teacher counterparts, with accuracy improving as the amount of training data increases. This enables financial institutions to deploy lightweight, specialized models directly within their research pipelines, supporting decisions related to feature engineering and risk management.

For further insights into this transformative approach, visit the NVIDIA blog.